Regulation of AI and Digital Markets: EU vs. U.S. Approaches

The rise of artificial intelligence (AI) and the rapid expansion of digital markets have created unprecedented opportunities and risks globally. AI technologies such as generative AI, machine learning, and large language models have revolutionized communication, business, and public administration, but they have also raised concerns about privacy, security, and ethical governance. In this context, the European Union (EU) and the United States (U.S.) have adopted fundamentally different approaches to AI regulation and data governance. While the EU has embraced a precautionary, regulatory-first model epitomized by the AI Act and the General Data Protection Regulation (GDPR), the U.S. relies primarily on a market-driven, innovation-first framework with fragmented sectoral regulations. These contrasting approaches reflect deeper political, economic, and cultural differences and have significant implications for transatlantic competition, citizen protection, and the global AI landscape.

The European Union: Regulation and Rights

The EU has positioned itself as a global leader in digital governance through comprehensive legislation designed to regulate AI and safeguard citizen rights. The EU AI Act, adopted in 2024, entered into force in August 2024, with its obligations applying in stages between 2025 and 2027. It is the world’s first binding, risk-based framework for AI. The Act categorizes AI systems according to their risk profiles. Practices considered to pose an “unacceptable risk,” such as social scoring, manipulative techniques that exploit people’s vulnerabilities, and most real-time remote biometric identification by law enforcement in public spaces, are banned outright. High-risk systems, including those used in critical infrastructure, law enforcement, and recruitment, are subject to strict obligations such as transparency, human oversight, and robust risk management. Limited-risk systems, such as chatbots, carry only light transparency duties, such as notifying users that they are interacting with AI, while minimal-risk systems face no new obligations.
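
The tiering logic described above can be pictured as a simple lookup from use case to tier to obligations. The following is only an illustrative sketch: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_USE_CASES`, and `obligations_for` are hypothetical, the obligations are paraphrased from this section rather than taken from the legal text, and real classification under the Act depends on detailed criteria that a lookup table cannot capture.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"  # the tier this section describes for chatbots


# Obligations per tier, paraphrased from the paragraph above (not legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: ["transparency", "human oversight", "risk management"],
    RiskTier.LIMITED: ["notify users that they are interacting with AI"],
}

# Hypothetical mapping, using only the examples named in this section.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "recruitment tool": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations attached to a listed use case."""
    tier = EXAMPLE_USE_CASES[use_case]
    return OBLIGATIONS[tier]


print(obligations_for("recruitment tool"))
```

The point of the sketch is the structure: the obligations attach to the tier, not to the individual product, which is why the assignment of a system to a tier carries so much regulatory weight.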

The AI Act is complemented by the GDPR, which gives effect to the right to data protection recognized in the EU Charter of Fundamental Rights. GDPR’s principles, including a lawful basis for processing (often consent), data minimization, purpose limitation, and the rights to access and delete personal information, provide a strong privacy framework. Companies must implement data governance measures and conduct impact assessments for high-risk processing, and they can face fines of up to €20 million or four percent of global annual turnover, whichever is higher, for the most serious violations. This combination of AI-specific and general data regulations reflects the EU’s “regulate first, innovate later” philosophy. The EU emphasizes ethical AI, citizen protection, and corporate accountability, even if such regulations slow the pace of technological deployment. Enforcement mechanisms, including national supervisory authorities and the European AI Office, are meant to ensure compliance and demonstrate the bloc’s intent to shape global norms, a phenomenon known as the “Brussels Effect.”
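
To make the scale of the GDPR penalty concrete, the upper bound can be written as a short calculation. This is a minimal sketch, assuming the ceiling for the most serious infringements under GDPR Article 83(5): the higher of €20 million or four percent of worldwide annual turnover. The function name `gdpr_max_fine_eur` is hypothetical, and actual fines are set case by case by supervisory authorities, usually well below the ceiling.

```python
def gdpr_max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound of a fine for the most serious GDPR infringements."""
    fixed_cap_eur = 20_000_000  # fixed ceiling in euros
    turnover_cap_eur = global_annual_turnover_eur * 4 / 100  # four percent
    # The applicable ceiling is whichever of the two amounts is higher.
    return max(fixed_cap_eur, turnover_cap_eur)


# A company with EUR 1 billion in turnover: the four percent limb applies.
print(gdpr_max_fine_eur(1_000_000_000))
# A company with EUR 100 million in turnover: the fixed EUR 20 million limb applies.
print(gdpr_max_fine_eur(100_000_000))
```

For large technology firms the turnover-based limb dominates, which is why the four percent figure is the one usually quoted.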

The United States: Innovation-First and Market-Driven

In contrast, the U.S. approach to AI regulation prioritizes technological innovation and economic competitiveness. There is currently no federal AI law comparable to the EU AI Act. Instead, governance is fragmented across federal and state agencies. The Biden administration issued Executive Order 14110 on safe, secure, and trustworthy AI in October 2023 (rescinded in January 2025), and NIST published its AI Risk Management Framework in January 2023. Federal policy has otherwise leaned on voluntary standards and industry collaboration. State-level legislation, including California’s privacy laws and Illinois’ Biometric Information Privacy Act, addresses some specific risks but does not add up to a coherent national framework.

The U.S. model is grounded in the belief that excessive regulation may stifle innovation and weaken the country’s competitive edge, particularly against China in the global AI race. Tech giants such as OpenAI, Google, and Meta exert significant influence through lobbying and voluntary compliance programs. Unlike the EU, the U.S. primarily regulates AI with a focus on national security, competition, and public safety rather than human rights or ethical considerations. Consequently, the American market fosters rapid technological experimentation and deployment, often at the cost of comprehensive citizen protections or uniform standards.

Comparative Analysis: Privacy and Data Governance

Privacy norms represent another major divergence between the EU and the U.S. The EU treats personal data as a fundamental right, enforcing strict consent requirements and limitations on data processing through GDPR. Individuals can access, correct, or erase their data, and companies face steep penalties for non-compliance. By contrast, the U.S. treats personal data as a commercial asset, with limited federal oversight. Data collection, profiling, and monetization are central to the business models of American tech companies. While certain sector-specific regulations exist, and states like California have introduced partial analogues to GDPR (e.g., CCPA), these measures are weaker and lack the comprehensive enforcement mechanisms present in Europe.

The differences in privacy governance have broader implications for AI deployment. In the EU, AI systems that process personal data must follow GDPR’s requirement of data protection by design and by default and, where risks are high, undergo impact assessments. In the U.S., companies can leverage vast datasets for AI training and experimentation with fewer constraints, enabling faster innovation but exposing citizens to potential misuse, bias, and surveillance. These contrasting models reflect deeper cultural and political norms: the EU prioritizes citizen protection and ethical oversight, whereas the U.S. emphasizes economic growth and technological leadership.

Implications for Transatlantic Relations and Global AI Governance

The divergence in regulatory approaches has created both opportunities and challenges for transatlantic relations. European regulations force U.S. companies to adapt their technologies and business models when operating in Europe, often leading to global adoption of EU-inspired standards. At the same time, U.S. market-driven policies enable faster AI innovation, prompting European debates about whether the AI Act may hinder technological competitiveness. Conflicts have emerged over data transfers, most visibly when the Court of Justice of the EU invalidated the Privacy Shield agreement in its 2020 Schrems II ruling, highlighting tensions between privacy protection and commercial interests.

Additionally, these differences affect global AI governance. The EU positions itself as a normative leader, exporting ethical standards and influencing international regulations, while the U.S. model promotes innovation-led governance, often setting de facto technological benchmarks. The transatlantic gap raises questions about international coordination on AI safety, algorithmic transparency, and digital market fairness. As AI becomes increasingly central to economic, military, and societal systems, the ability of Europe and the U.S. to harmonize or reconcile their approaches will have far-reaching consequences for global digital governance.

Conclusion

The contrasting approaches of the EU and U.S. to AI regulation and digital market governance reflect fundamental differences in political culture, economic priorities, and views on individual rights. The EU emphasizes precaution, human-centric AI, and privacy protection through the AI Act and GDPR, aiming to safeguard citizens even at the cost of slower innovation. The U.S. favors a market-driven, innovation-first model, relying on voluntary guidelines and sectoral regulations, prioritizing technological leadership and economic competitiveness. These divergent frameworks shape how AI develops, how personal data is treated, and how transatlantic relations and global digital governance evolve. Understanding the differences and potential intersections between these models is crucial for policymakers, businesses, and scholars seeking to navigate the opportunities and risks of AI in the coming decade.

The World in Focus: Highlights from Foreign Affairs’ Best Books of 2025

2025: A World Without Resolution

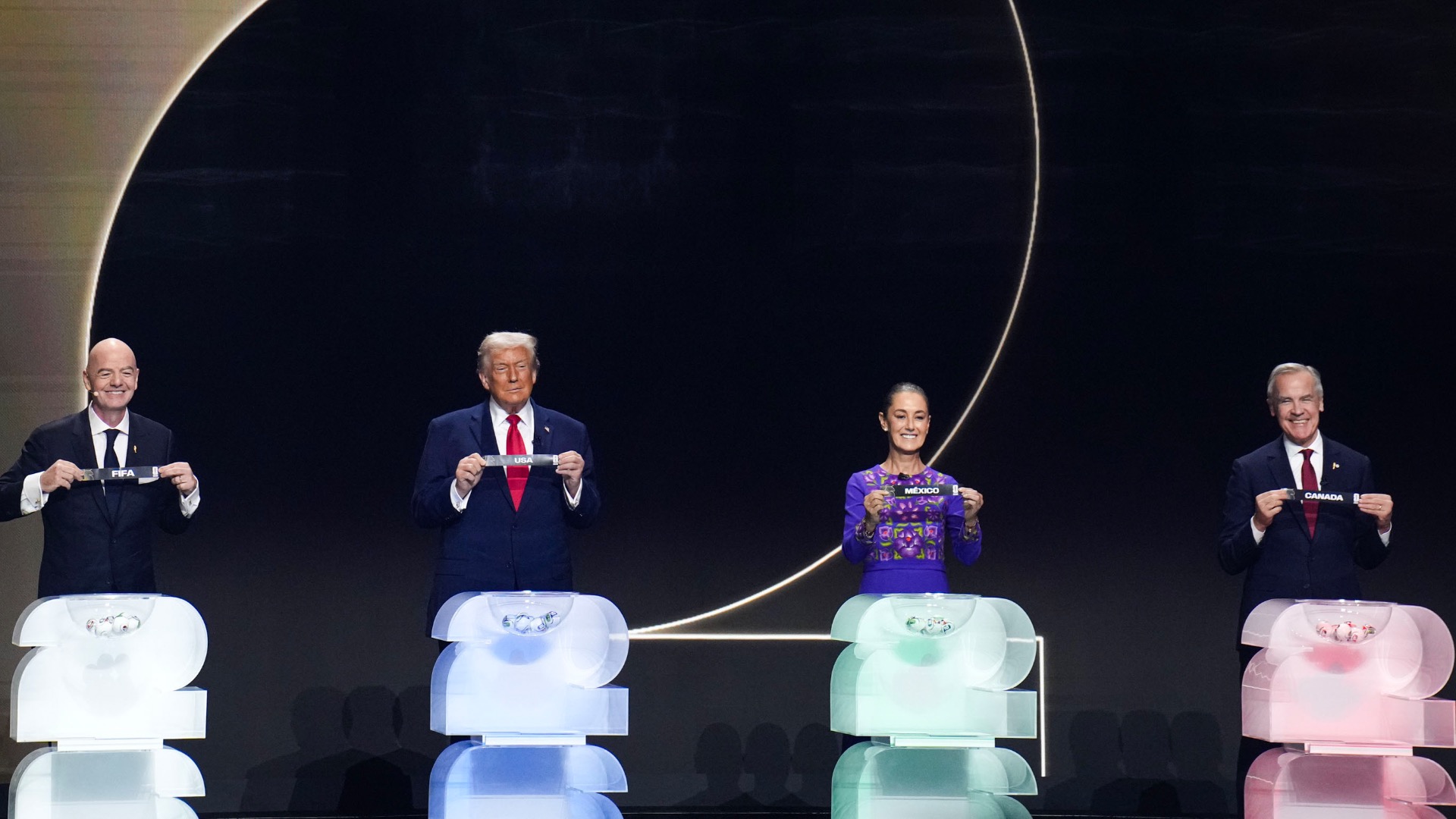

The U.S.-Venezuela Limited War of 2025: A Legal and Strategic Assessment